What you need to know about the Google BERT algorithm update

Google can now better understand the intent behind the phrases people search for, meaning it's more important than ever to write your website content for humans rather than robots. Find out how the Google BERT algorithm update affects your SEO strategy.

Bidirectional Encoder Representations from Transformers, or BERT for short, is a Google natural language processing (NLP) algorithm that was introduced to Google Search on Oct 22, 2019.

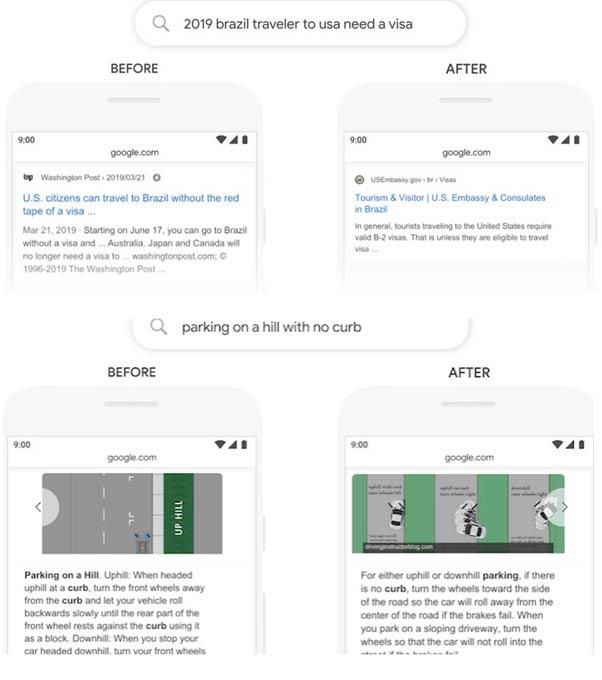

The Google BERT algorithm update impacts featured snippets and organic rankings. By Google's own estimate at launch, it affects about 1 in 10 search queries made in English in the US, mainly longer-tail queries.

What is BERT and why should you care?

BERT is an open-source, neural network-based framework produced by Google for NLP (and also a research project and academic paper).

Unlike previous NLP models such as GloVe and Word2Vec, which were context-free, BERT is all about providing “context”. It aims to understand the intent behind the phrases people search for on Google so that it can deliver the most relevant results, and in doing so, to keep improving its handling of the intricacies of language.
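To make the "context-free" versus "context" distinction concrete, here is a minimal toy sketch in Python. It is not how BERT, GloVe or Word2Vec actually work: the two-number vectors are made up, and the neighbour-averaging step is only a stand-in for context sensitivity. The function names are hypothetical and chosen for illustration.

```python
# Toy illustration only: these 2-d vectors are invented, and the averaging
# step is a stand-in for context sensitivity, not BERT's real mechanism.

STATIC_VECTORS = {
    "river": (1.0, 0.0),
    "bank": (0.5, 0.5),
    "loan": (0.0, 1.0),
}


def static_embedding(word, sentence):
    """Context-free lookup: the surrounding sentence is ignored entirely."""
    return STATIC_VECTORS[word]


def contextual_embedding(word, sentence):
    """Blend the word's vector with the average of the other known words."""
    base = STATIC_VECTORS[word]
    neighbours = [
        STATIC_VECTORS[w] for w in sentence if w != word and w in STATIC_VECTORS
    ]
    if not neighbours:
        return base
    avg = tuple(sum(v[i] for v in neighbours) / len(neighbours) for i in range(2))
    return tuple((base[i] + avg[i]) / 2 for i in range(2))


river_sentence = ["river", "bank"]
loan_sentence = ["bank", "loan"]

# The static lookup gives "bank" the same vector in both sentences;
# the context-aware version gives it a different vector in each.
same_static = static_embedding("bank", river_sentence) == static_embedding(
    "bank", loan_sentence
)
same_contextual = contextual_embedding(
    "bank", river_sentence
) == contextual_embedding("bank", loan_sentence)
print(same_static, same_contextual)  # prints: True False
```

The point of the sketch is simply that a context-free model has one fixed representation per word, whereas a contextual model represents the same word differently depending on what surrounds it.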

The BERT upgrade mostly benefits long search queries, more ambiguous search queries and queries that use prepositions such as “to” and “for”. The screenshot example further below compares search results prior to the BERT upgrade and after. Before BERT, Google struggled to grasp the subtle nuances of long, complexly worded search queries; since BERT, it handles them noticeably better.

The greatest search improvements from BERT are expected in the understanding of different languages and in voice search, which is generally more conversational than traditional typed search. For example: “Find me a plumber nearby.”

Traditional typed search, by contrast, usually involves short key phrases rather than long ones, such as “Plumber near me”.

It’s important to note that while BERT represents a leap forward for NLP, it still has a long way to go. BERT is not particularly good at understanding what things are not. For example, if you were to input “a Great Dane is a…” it would predict “dog”, but if you typed “a Great Dane is not a…” it likely would still predict “dog”.
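If you would like to try this kind of negation test yourself, the sketch below shows one way to do it in Python. It assumes the open-source Hugging Face `transformers` library (plus PyTorch) is installed and uses the publicly released `bert-base-uncased` research model, which is not necessarily the same system Google runs in Search. The helper names `masked_prompts` and `top_predictions` are hypothetical.

```python
# Assumes `pip install transformers torch`; the model downloads on first use.
# bert-base-uncased is the public research checkpoint, used here as an
# assumption; it is not claimed to be what Google Search runs.


def masked_prompts(subject):
    """Build an affirmative and a negated prompt ending in BERT's [MASK] slot."""
    return [
        f"A {subject} is a [MASK].",
        f"A {subject} is not a [MASK].",
    ]


def top_predictions(prompt, k=3):
    """Return the k most likely fill-ins for the [MASK] slot in `prompt`."""
    from transformers import pipeline  # imported lazily: heavy dependency

    fill_mask = pipeline("fill-mask", model="bert-base-uncased")
    return [result["token_str"] for result in fill_mask(prompt, top_k=k)]
```

Calling `top_predictions(p)` for each `p` in `masked_prompts("Great Dane")` lets you compare the model's guesses for the two sentences side by side. Results may vary by model version, so treat whatever you see as an informal check rather than a benchmark.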

The main aim of BERT

In keeping with the main goal behind most of Google’s algorithm updates, the BERT upgrade helps Google better understand what you’re looking for when you type in a search query and aims to provide you with search results best aligned to what you are looking for.

BERT aims to provide direct answers to questions and informational queries, rather than appealing to keywords. Basically, BERT aims to allow you to search in a way that feels more natural to you.

Misconceptions

Since BERT was released, there has been much hype about how “revolutionary” it is. In its present state, though, it is still far from understanding language and context the way humans do. Think of it this way: while the house isn’t yet built, the foundations have been laid and construction is ongoing. In its current form, BERT is a precursor of what’s to come.

University of Chicago professor, computational linguist and NLP researcher Allyson Ettinger has been studying NLP and BERT as part of her position at the university. She says: “We'll be heading on the same trajectory for a while, building bigger and better variants of BERT that are stronger in the ways that BERT is strong and probably with the same fundamental limitations”.

Language understanding remains an ongoing challenge, but as BERT continues to learn and is refined and expanded upon, it will become better at taking learnings from one language and applying them to others. So, as BERT improves at understanding the intricacies and complexities of the English language, it can apply what it’s learnt to improve its understanding of other languages and ultimately help provide people with more relevant results in various languages. Currently, Hindi, Korean and Portuguese are seeing the biggest leaps in language improvements.

Can you optimise content to appeal to BERT?

You can't optimise websites for BERT per se. However, BERT makes it even more sensible to ensure your website contains excellent, unique content that aligns with what your prospective clients or customers are searching for and fulfills their needs.

From an SEO perspective, the main thing to keep in mind regarding BERT is that it better understands content without having to rely as heavily on keywords. So, to tickle BERT’s fancy, aim to write natural-sounding content that appeals to the human eye rather than keyword-heavy, unnatural-sounding content.

As BERT mostly impacts longer-tail, question-based queries, creating content around specific questions and providing laser-focused, concise answers is arguably the biggest thing to focus on in order to appeal to BERT.

To help you get a better handle on SEO writing that appeals to NLP, visit BRIGGSBY.

For an in-depth explanation of the intricacies of NLP and Google’s BERT, check out the Search Engine Journal’s BERT Explained blog post. To learn more about what BERT can’t do, review the research paper What BERT is Not.

Other BERT versions

- Microsoft’s Multi-Task Deep Neural Network (MT-DNN) incorporates BERT

- Facebook uses RoBERTa

- SuperGLUE Benchmark

What's next, Kook?

A meeting with us costs NOTHING. Even if you have an inkling you aren't getting the results you'd expect, let's have a chat.